Question answering (AI text prompting)

This action is the automated activity of the AI Workbench. Click the wrench to navigate to the workbench and evaluate your settings.

Usage

The workflow always continues with the next action on the outgoing edge.

If an error occurs, the activity error is used. This can happen, for example, if no internet connection is available to contact the API endpoint.

Variables created

ai.prompt.result

This contains the result that the large language model returned for the input prompt.

ai.prompt.history

A list of the messages that were sent to the LLM and what the LLM returned as an answer. This data is currently not directly usable as a simple variable; working with it requires some programming.

Settings

Endpoint and Model name

After you select one of the endpoints, you can choose a suitable model from the list of models available on that endpoint.

User prompt

This contains the question that the artificial intelligence should answer. You can use variables from the workflow to add input which is forwarded to the AI. This text can also be adjusted from the workbench and variables may be selected from the menu.

Here is an example that makes use of the text functionality:

What is the weather in ${variable.city} tomorrow ${variable.daytime}?
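The way workflow variables end up in such a prompt can be pictured with a minimal Python sketch. It is not the product's implementation: the helper name `render_prompt`, the flat dictionary of variables, the sample values, and the choice to leave unknown placeholders untouched are all assumptions made for illustration.

```python
import re

# Matches placeholders of the form ${name}, e.g. ${variable.city}.
PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each ${name} with its value from `variables` (hypothetical helper).

    Assumption of this sketch: unknown placeholders are left unchanged so
    that a missing variable stays visible in the resulting prompt.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return str(variables.get(name, match.group(0)))

    return PLACEHOLDER.sub(substitute, template)


# Sample values are invented for the example.
prompt = render_prompt(
    "What is the weather in ${variable.city} tomorrow ${variable.daytime}?",
    {"variable.city": "Berlin", "variable.daytime": "morning"},
)
print(prompt)  # What is the weather in Berlin tomorrow morning?
```

The rendered text is what would be forwarded to the AI as the user prompt.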

Conversation rounds

The activity can track a conversation if desired (this is disabled by default). Keep in mind that this option is not suitable for batch processing, because messages that do not belong together would be combined into one conversation and could distort the answers.

If this option is active, the question and the resulting answer are stored and sent again the next time the activity is visited, together with the follow-up question from the next prompt.

For example, if this is set to 3 messages, only the last three messages, including the results of the AI assistant, are stored and the rest is discarded. This means that the 4th time the activity is started, the initial prompt is no longer sent to the AI endpoint.

The system prompt is only taken into account the first time the activity is visited and the conversation is initiated.
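The windowing of conversation rounds can be sketched in Python as follows. This is an illustration under stated assumptions, not the activity's actual code: the helper `build_messages`, the `user`/`assistant` role names, and the rule that the window of 3 counts whole rounds and includes the round currently being asked are all assumptions, chosen so that the fourth visit no longer sends the initial prompt. The system prompt is left out of the sketch.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]
Round = Tuple[str, str]  # (question, answer)


def build_messages(history: List[Round], question: str, rounds: int) -> List[Message]:
    """Return the messages for the next request (hypothetical helper).

    Assumption: the window of `rounds` includes the round being asked now,
    so at most `rounds - 1` completed rounds are sent again.
    """
    start = max(0, len(history) - (rounds - 1))
    messages: List[Message] = []
    for asked, answered in history[start:]:
        messages.append({"role": "user", "content": asked})
        messages.append({"role": "assistant", "content": answered})
    messages.append({"role": "user", "content": question})
    return messages


# Simulate four visits with a window of 3; the answers are placeholders.
history: List[Round] = []
sent_per_visit = []
for visit in range(1, 5):
    question = f"question {visit}"
    sent = build_messages(history, question, rounds=3)
    sent_per_visit.append([message["content"] for message in sent])
    history.append((question, f"answer {visit}"))

print(sent_per_visit[3])
# ['question 2', 'answer 2', 'question 3', 'answer 3', 'question 4']
```

On the third visit the first question is still part of the request; on the fourth it has dropped out of the window.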

Advanced

Temperature

What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic.
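The effect of the temperature can be illustrated with a small Python sketch of temperature-scaled softmax, the standard way a temperature reshapes a probability distribution over tokens. The scores below are made up, and how a particular endpoint applies the setting internally is not covered by this page; the sketch also requires a temperature greater than 0.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by a temperature > 0."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
focused = softmax_with_temperature(logits, 0.2)
random_like = softmax_with_temperature(logits, 0.8)

# Probability of the most likely token at each temperature.
print(round(focused[0], 3), round(random_like[0], 3))
```

At 0.2 nearly all probability is concentrated on the highest-scoring token, while at 0.8 the other tokens keep a noticeable share, which is why higher values produce more varied output.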

Top P

This is called nucleus sampling, an alternative to sampling with temperature. A value of 0.1 means that only the tokens comprising the top 10% of the probability mass are considered.
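As a minimal Python sketch of the idea, the function below keeps the most likely tokens until their cumulative probability reaches the Top P value and renormalises what is left. The function name `nucleus`, the token names, and their probabilities are invented for illustration; an endpoint's actual implementation may differ in details such as tie handling.

```python
def nucleus(probabilities: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalise the kept probabilities."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, probability in ranked:
        kept.append((token, probability))
        cumulative += probability
        if cumulative >= top_p:
            break
    total = sum(probability for _, probability in kept)
    return {token: probability / total for token, probability in kept}


# Invented distribution over four candidate tokens.
candidates = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.15, "snowy": 0.05}
print(list(nucleus(candidates, 0.1)))  # ['sunny']
print(list(nucleus(candidates, 0.9)))  # ['sunny', 'cloudy', 'rainy']
```

With a small Top P only the single most likely token survives, so the output becomes close to deterministic; larger values admit more of the less likely tokens.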

Maximum tokens

This is the maximum length of text that the model should generate. It is usually limited by the context size.

System prompt

Overrides the default system prompt from the settings.

E.g. You are a helpful assistant.

Available variables

This section shows the known variables that are available for inclusion in the prompt. The workbench user interface offers better support for variable selection.

Example

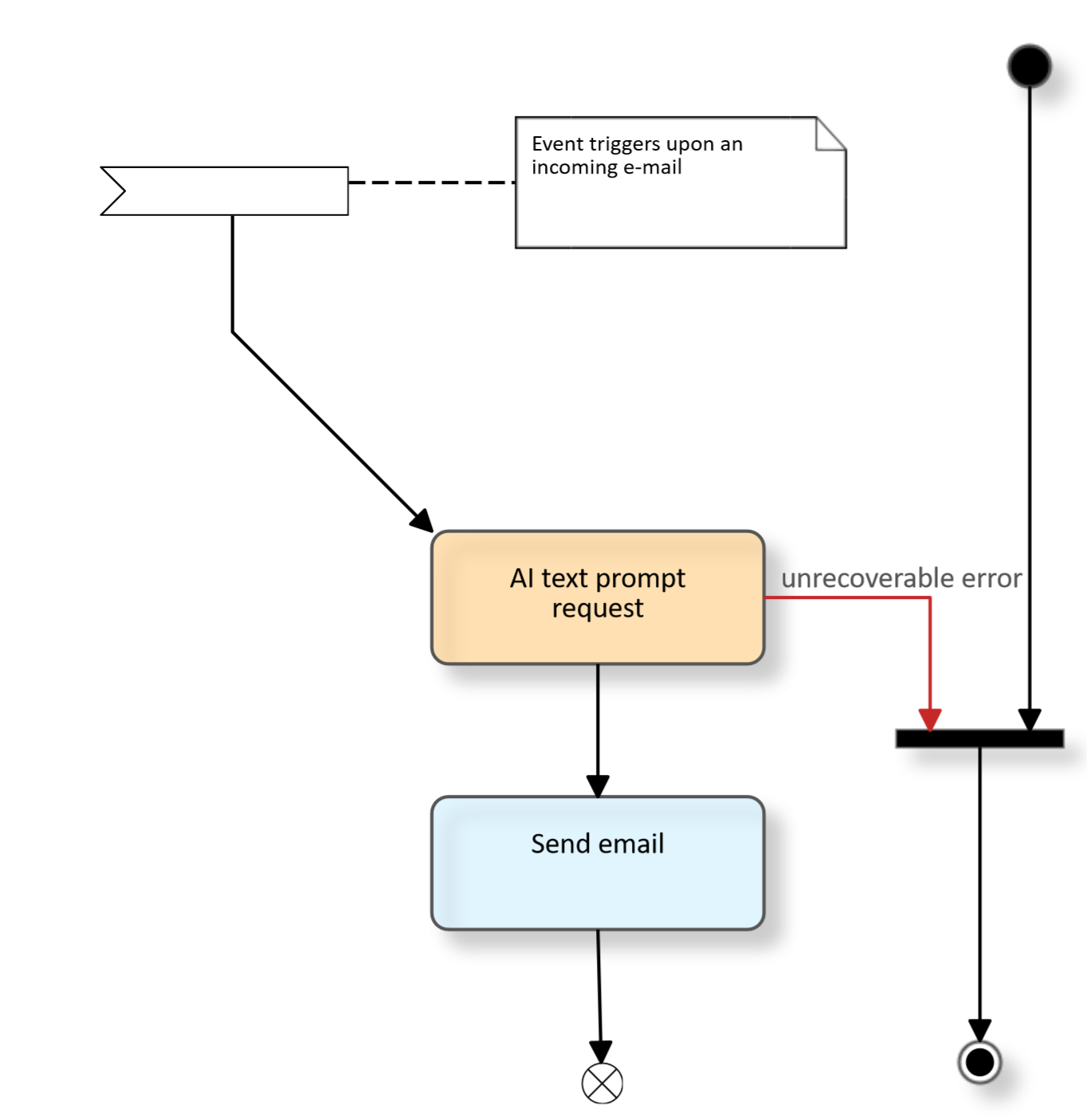

The example below shows how to model an E-Mail receiver that automatically responds via an AI answer.

When using such a scenario in production, you must consider the rules and regulations that apply. See e.g. Generative AI checklist for more information.